A recent report from Citi Research’s Peter Lee looks at the impact of AI on the memory chip industry.

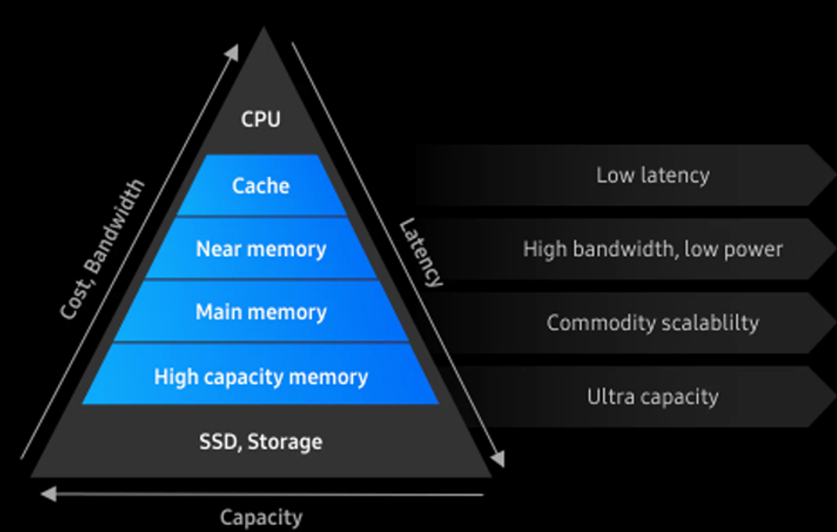

Memory isn’t what it used to be. AI-driven demand is fundamentally altering the memory sector’s product landscape.

AI data processing requires parallel, simultaneous computation on large volumes of data, so memory products need to be modified to minimize data bottlenecks.

Figure 1. New Memory Hierarchy

Source: Samsung, Citi Research

Citi Research analysts expect in-memory computing to be more widely adopted in the future. While most AI data processing will continue to be handled by AI servers, they say processing at the edge will also be needed to improve the efficiency of overall AI data processing, which will increase demand for on-device AI.

They identify three areas of surging AI demand, each of which should push the memory space in a distinct direction:

[1] AI Super Computing for training and inference of Large Language Models (LLMs),

[2] On-device AI to partially handle AI processing workloads at the edge device level, and

[3] Memory Pooling, in which memory is shared to improve the efficiency of feeding high-capacity data.

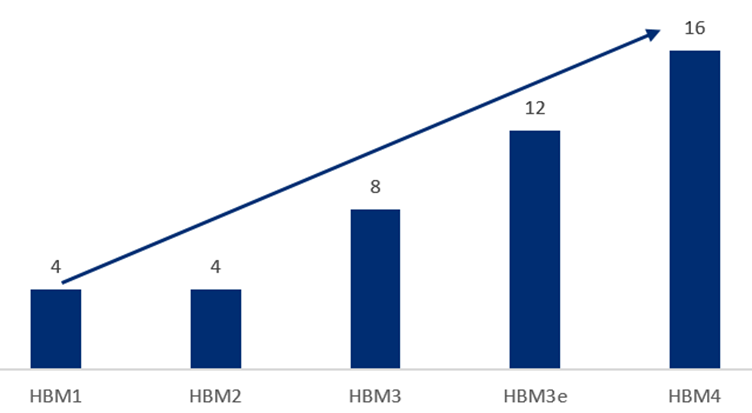

Direction 1: Broader uptake of High Bandwidth Memory (HBM) and Multi-Ranked Buffered Dual In-Line Memory Module (MRDIMM) for AI Super Computing

The first direction Citi Research analysts see is wider adoption of HBM and MRDIMM to boost memory bandwidth. HBM adoption, they say, is already well underway.

Figure 2. DRAM Density (GB) by HBM Chip

Source: Citi Research.

Direction 2: Memory product diversification for On-Device AI

The on-device AI trend will lead to diversification in memory products, Citi Research analysts say.

Most heavy AI computing tasks will still be carried out on centralized servers, but if basic AI tasks are handled by smartphones or PCs, AI servers can process the remaining tasks faster and more accurately.

Direction 3: CXL for In-Memory Computing

The third direction Citi analysts predict is in-memory computing, in which large amounts of data are processed jointly with other systems. As part of this, the analysts expect adoption of Compute Express Link (CXL), which allows memory to be shared by various systems. CXL adoption could begin in 2H24E and dramatically improve data processing efficiency.

CXL is designed to make better use of the accelerators, memory, and storage devices used alongside the CPU in a high-performance computing system.

The report also examines expectations that memory products will become semi-customized, as AI memory products drive greater product diversification and complexity going forward.

As such, the report says, the memory market will likely develop in the direction of higher product optimization in order to meet customer needs.

For more information on this subject, Velocity subscribers can see the full report, Global Semiconductors - Diversified AI Product Demand to Shift Memory Market Toward Semi-Customization (Non-Commodity) (1 Jan 2024).

Citi Global Insights (CGI) is Citi’s premier non-independent thought leadership curation. It is not investment research; however, it may contain thematic content previously expressed in an Independent Research report.